Self-awareness is a broad concept borrowed from cognitive science and psychology. It describes the property of a system that has knowledge of itself, based on its own senses and internal models. This knowledge may take different forms, is based on perceptions of both internal and external phenomena, and is essential for anticipating and adapting to unknown situations. Computational self-awareness is a promising new field whose methods enable an autonomous agent to detect nonstationary conditions, to learn internal models of its environment, and to autonomously adapt its behavior and structure to the tasks its context demands.
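To make "detecting nonstationary conditions" and "learning internal models" more concrete, here is a minimal Python sketch. It assumes a single scalar sensor signal. The class `OnlineSelfModel`, its z-score threshold, its warm-up length, and its reset-on-novelty adaptation rule are hypothetical illustrations, not the methods used in the work described on this page.

```python
import math


class OnlineSelfModel:
    """Hypothetical minimal internal model of one sensed signal.

    Learns the running mean and variance online (Welford's algorithm) and
    flags an observation as novel when it lies more than `threshold`
    standard deviations away from what the model expects.
    """

    def __init__(self, threshold=4.0, warmup=10):
        self.threshold = threshold  # assumed z-score cut-off
        self.warmup = warmup        # samples to learn before judging novelty
        self.reset()

    def reset(self):
        self.n = 0
        self.mean = 0.0
        self.m2 = 0.0  # running sum of squared deviations from the mean

    def std(self):
        return math.sqrt(self.m2 / (self.n - 1)) if self.n > 1 else 0.0

    def is_novel(self, x):
        if self.n < self.warmup:
            return False  # not enough evidence yet to call anything unusual
        s = self.std()
        if s == 0.0:
            return x != self.mean
        return abs(x - self.mean) / s > self.threshold

    def update(self, x):
        # Welford's online update of mean and sum of squared deviations.
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self.m2 += delta * (x - self.mean)

    def observe(self, x):
        """Check x against the model, adapt if it is novel, then learn from it.

        Returns True when x indicates a changed (nonstationary) condition.
        """
        novel = self.is_novel(x)
        if novel:
            # Assumed adaptation rule: drop the outdated model and relearn.
            self.reset()
        self.update(x)
        return novel


# Usage: a stable signal that suddenly jumps to a new operating level.
model = OnlineSelfModel()
signal = [10.0, 10.2, 9.9, 10.1, 9.8, 10.0, 10.3, 9.7, 10.1, 9.9, 10.0, 25.0, 25.1]
flags = [model.observe(x) for x in signal]
first_change = flags.index(True)  # index of the jump to 25.0
```

Resetting on every novel sample is the simplest possible adaptation; a real self-aware system would more likely keep several models and switch between them.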

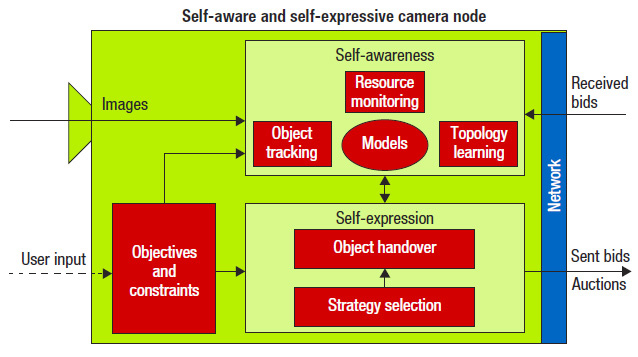

Within a European project, Bernhard Rinner’s research team and its partners have proposed a computational framework that adopts the concept of self-awareness to manage the complex tradeoffs among performance, flexibility, resources, and reliability more efficiently. Our framework instantiates dedicated software components and their interactions to create a flexible, self-aware, and self-expressive multicamera tracking application.

The figure depicts the architecture of an individual camera node. It consists of six building blocks, each with its own specific objectives, that interact with one another in the network. Self-awareness (SA) is realized through the object tracking, resource monitoring, and topology learning blocks. Instead of relying on predefined knowledge and rules, these blocks use online learning to maintain models of the camera’s state and context. These models serve as input to self-expression (SE), which comprises the object handover and strategy selection blocks. Finally, the objectives and constraints block represents the camera’s objectives and resource constraints, both of which strongly influence the other building blocks.
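The data flow between the six blocks can be sketched in Python as follows. This is a deliberately simplified illustration under stated assumptions: every class, field, and threshold (`ObjectivesAndConstraints`, `message_budget`, `confidence_threshold`, the "broadcast"/"targeted" strategies, the link-strength update) is hypothetical and is not taken from the published framework. It only shows SA models being learned online and then consumed by SE, with the objectives and constraints block bounding both.

```python
from dataclasses import dataclass, field


@dataclass
class ObjectivesAndConstraints:
    """Objectives and constraints block (illustrative values only)."""
    message_budget: int = 3             # assumed max handover messages per time step
    confidence_threshold: float = 0.5   # assumed: below this, seek a handover


@dataclass
class SelfAwareness:
    """The three SA blocks, each reduced to a simple online model."""
    confidence: dict = field(default_factory=dict)  # object tracking: object id -> confidence
    messages_sent: int = 0                          # resource monitoring
    links: dict = field(default_factory=dict)       # topology learning: neighbour -> strength

    def learn_link(self, neighbour, success, rate=0.5):
        # Topology learning: move the link strength towards 1 after a
        # successful handover and towards 0 after a failed one.
        old = self.links.get(neighbour, 0.0)
        self.links[neighbour] = old + rate * ((1.0 if success else 0.0) - old)


class CameraNode:
    def __init__(self, objectives, neighbours):
        self.objectives = objectives
        self.neighbours = list(neighbours)
        self.sa = SelfAwareness()

    def new_time_step(self):
        self.sa.messages_sent = 0  # resource usage is counted per time step

    def select_strategy(self):
        # Strategy selection (SE): broadcast while the remaining budget can
        # reach every neighbour, otherwise contact only the strongest links.
        remaining = self.objectives.message_budget - self.sa.messages_sent
        return "broadcast" if remaining >= len(self.neighbours) else "targeted"

    def handover(self, obj):
        """Object handover (SE): return the neighbours asked to take over obj."""
        if self.sa.confidence.get(obj, 0.0) >= self.objectives.confidence_threshold:
            return []  # object is still tracked well, no handover needed
        remaining = self.objectives.message_budget - self.sa.messages_sent
        if remaining <= 0:
            return []  # resource constraint: no messages left this step
        if self.select_strategy() == "broadcast":
            recipients = list(self.neighbours)
        else:
            ranked = sorted(self.neighbours,
                            key=lambda n: self.sa.links.get(n, 0.0), reverse=True)
            recipients = ranked[:remaining]
        self.sa.messages_sent += len(recipients)
        return recipients


# Usage: one camera with three neighbours and a budget of four messages.
node = CameraNode(ObjectivesAndConstraints(message_budget=4), ["cam_a", "cam_b", "cam_c"])
node.sa.confidence = {"person_1": 0.2, "person_2": 0.9}
node.sa.learn_link("cam_b", success=True)  # an earlier handover to cam_b succeeded
first = node.handover("person_1")   # budget covers everyone: broadcast
second = node.handover("person_3")  # one message left: targeted to the strongest link
kept = node.handover("person_2")    # confident track: no handover
```

Reducing strategy selection to a budget check is only one way the objectives and constraints block could steer self-expression; it was chosen here because it keeps the dependency between the blocks easy to follow.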

Together with partners from the University of Genoa, we have recently introduced a bio-inspired framework for generative and descriptive dynamic models that support SA in a computationally efficient way. Generative models make it possible to predict future states, while descriptive models enable the selection of the representation that best fits the current observation. Our framework is founded on the analysis and extension of three bio-inspired theories that study SA from different viewpoints. We demonstrate how probabilistic techniques, such as cognitive dynamic Bayesian networks and generalized filtering paradigms, can learn appropriate models from multidimensional proprioceptive and exteroceptive signals acquired by an autonomous system.
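The interplay between generative prediction and descriptive model selection can be illustrated with a much simpler stand-in than the cognitive dynamic Bayesian networks and generalized filtering used in the work above. The Python sketch below assumes a bank of scalar Kalman filters, one per hypothesized dynamic (a constant level or a constant drift). Each filter generatively predicts the next observation, and at every step the model under which the actual observation is most likely is selected. The model names, drift values, and noise variances are hypothetical.

```python
import math


class DriftKalman:
    """Scalar Kalman filter for x_t = x_{t-1} + drift + w,  y_t = x_t + v."""

    def __init__(self, drift, x0=0.0, p0=1.0, q=0.01, r=0.1):
        self.drift, self.q, self.r = drift, q, r  # q, r: assumed noise variances
        self.x, self.p = x0, p0

    def predict(self):
        # Generative step: predicted next state and its variance.
        return self.x + self.drift, self.p + self.q

    def step(self, y):
        """Score y under the prediction, then correct the state estimate.

        Returns the Gaussian log-likelihood of y under this model's prediction.
        """
        x_pred, p_pred = self.predict()
        s = p_pred + self.r   # innovation variance
        e = y - x_pred        # innovation (prediction error)
        loglik = -0.5 * (math.log(2 * math.pi * s) + e * e / s)
        k = p_pred / s        # Kalman gain
        self.x = x_pred + k * e
        self.p = (1 - k) * p_pred
        return loglik


def select_models(observations, drifts):
    """Descriptive step: at each time, pick the model that best explains y."""
    models = {name: DriftKalman(d) for name, d in drifts.items()}
    chosen = []
    for y in observations:
        scores = {name: m.step(y) for name, m in models.items()}
        chosen.append(max(scores, key=scores.get))
    return chosen


drifts = {"static": 0.0, "drifting": 1.0}  # hypothetical model bank
ramp = select_models([1, 2, 3, 4, 5, 6], drifts)  # steadily rising signal
flat = select_models([0, 0, 0, 0], drifts)        # constant signal
```

On the rising signal the "drifting" model predicts every sample exactly and is selected at each step; on the constant signal the "static" model wins. Because both filters share the same noise settings, their predictive variances are identical, so the selection depends only on the prediction error.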

Funded projects

- Doctoral School on Decision Making in a Digital Environment (DECIDE): co-principal investigator. Funding from University of Klagenfurt, 2019-2022.

- Engineering Proprioception in Computing Systems (EPiCS): WP leader. Funding from EU (FET PROACTIVE), 2010-2014.

Selected publications

- Carlo Regazzoni, Lucio Marcenaro, Damian Campo, and Bernhard Rinner. Multi-sensorial generative and descriptive self-awareness models for autonomous systems. Proceedings of the IEEE, 108(7):987-1010, 2020.

- Bernhard Rinner, Lukas Esterle, Jennifer Simonjan, Georg Nebehay, Roman Pflugfelder, Peter R. Lewis, and Gustavo Fernandez Dominguez. Self-aware and Self-expressive Camera Networks. IEEE Computer, 48(7):21-28, July 2015.

- Peter R. Lewis, Lukas Esterle, Arjun Chandra, Bernhard Rinner, Jim Torresen, and Xin Yao. Static, Dynamic and Adaptive Heterogeneity in Distributed Smart Camera Networks. ACM Transactions on Autonomous and Adaptive Systems, 10(2), 30 pages, June 2015.

- Lukas Esterle, Peter R. Lewis, Xin Yao, and Bernhard Rinner. Socio-Economic Vision Graph Generation and Handover in Distributed Smart Camera Networks. ACM Transactions on Sensor Networks, 10(2), 24 pages, 2014.